Tuesday, March 19, 2013

JTAG Communication by Java

Using nothing but its built-in functions, the new target board DE0-Nano can communicate with the host PC over JTAG through its download cable. The DE0-Nano contains a USB-Blaster-compatible circuit, so the host PC can access the board simply through the USB-Blaster driver. Because this compatible circuit uses the FT245BL made by Future Technology Devices International Ltd. (FTDI), the USB-Blaster driver is actually FTDI’s D2XX driver. As evidence, the board can be accessed using only the DLL that comes with the D2XX driver (ftd2xx.dll). The next question is what data should be exchanged. Details are available in a document from the UrJTAG project. In short, data are packed into USB packets of at most 64 bytes and sent in one of two formats: bit-banging mode, which can control every JTAG signal, or byte-shift mode, which allows high-speed data transfer. Then, how should the FPGA side talk to the host PC over JTAG? The simplest way is probably to use the Virtual JTAG megafunction intended for on-chip debugging.
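To make the two formats concrete, here is a small sketch that builds both kinds of byte. The bit assignments are my reading of open-source USB-Blaster drivers (UrJTAG, OpenOCD) and should be treated as an assumption; the class and method names are hypothetical. In bit-banging mode (bit 7 = 0) each byte sets the pins directly, so one TCK period takes two bytes; in byte-shift mode a header byte with bit 7 set announces how many data bytes follow, and bit 6 asks for TDO to be read back.

```java
public class BlasterBytes {
    // Assumed bit assignments of a bit-bang byte (bit 7 = 0).
    static final int TCK = 1 << 0, TMS = 1 << 1, NCE = 1 << 2, NCS = 1 << 3,
                     TDI = 1 << 4, LED = 1 << 5, READ = 1 << 6;
    // Bit 7 = 1 selects byte-shift mode; bits 5..0 hold the number of data bytes that follow.
    static final int SHIFT = 1 << 7;

    // One TCK period: the same pin levels with TCK low, then with TCK high.
    static byte[] clock(boolean tms, boolean tdi) {
        int base = NCE | NCS | LED | (tms ? TMS : 0) | (tdi ? TDI : 0);
        return new byte[] {(byte) base, (byte) (base | TCK)};
    }

    // Byte-shift header for 'count' data bytes (1..63), optionally reading TDO back.
    static int shiftHeader(int count, boolean read) {
        if (count < 1 || count > 63) throw new IllegalArgumentException("count must be 1..63");
        return SHIFT | (read ? READ : 0) | count;
    }

    public static void main(String[] args) {
        byte[] low = clock(false, false);
        System.out.printf("%02X %02X%n", low[0], low[1]);                                // 2C 2D
        System.out.printf("%02X %02X%n", shiftHeader(1, false), shiftHeader(1, true)); // 81 C1
    }
}
```

These values line up with the constants in the program below: the bytes 0x2C, 0x2D sent low byte first form the word L = 0x2D2C, clock(true, true) gives 0x3E, 0x3F (TMS_H = 0x3F3E), and the two headers are WR = 0x81 and RD = 0xC1.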

This figure shows input and output pins connected to the sld_virtual_jtag megafunction in the schematic editor of the Quartus II software. Here we input and output 8-bit data, so set the parameter sld_ir_width to 8, and set sld_auto_instance_index to “YES” just in case. Connect the input pins key[7..0] to ir_out, and the output pins led[7..0] to ir_in. If you would rather not enter the design yourself, the block design file (jtag_led.bdf) and the already-synthesized SOF file (jtag_led.sof) are available. If you use the block design file, however, you need to assign the pin numbers and the device (EP4CE22F17C6) yourself. Program the DE0-Nano with this circuit beforehand, and then run the Java program below in an environment where JNA is installed. I have confirmed that it works only on Windows XP, but it will probably work in any Win32 environment. I haven’t tested it in a 64-bit environment yet, but I expect it to work just by changing the DLL name from “usbblstr32” to “usbblstr64”.

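```java
import com.sun.jna.*;
import com.sun.jna.ptr.*;

public class JTAG_LED {

    // D2XX functions, bound through JNA direct mapping (see the static block).
    static native int FT_OpenEx(String pArg1, int flags,
            PointerByReference pHandle);

    static native int FT_Close(Pointer ftHandle);

    static native int FT_Write(Pointer ftHandle, Pointer lpBuffer,
            int dwBytesToWrite, IntByReference lpdwBytesWritten);

    static native int FT_Read(Pointer ftHandle, Pointer lpBuffer,
            int dwBytesToRead, IntByReference lpdwBytesReturned);

    static {
        // D2XX DLL shipped with the USB-Blaster driver (32-bit name).
        Native.register("usbblstr32");
    }

    Pointer ftHandle = null;

    public JTAG_LED(String description) {
        PointerByReference pHandle = new PointerByReference();
        // flags = 2: open the device by its description string (FT_OPEN_BY_DESCRIPTION).
        if (FT_OpenEx(description, 2, pHandle) != 0) {
            throw new RuntimeException("FT_OpenEx failed.");
        }
        ftHandle = pHandle.getValue();
    }

    public void close() {
        FT_Close(ftHandle);
    }

    Memory memory = new Memory(64);   // one USB packet carries at most 64 bytes
    IntByReference ref = new IntByReference();

    // Bit-bang words: each short goes out as two bytes, low byte first (little-endian x86),
    // i.e. one TCK period: TCK low, then TCK high.
    // Byte bits: 0 = TCK, 1 = TMS, 2 = nCE, 3 = nCS, 4 = TDI, 5 = LED, 6 = READ.
    public static final short L = 0x2D2C;          // TMS = 0, TDI = 0
    public static final short H = L | 0x1010;      // TMS = 0, TDI = 1
    public static final short TMS = L | 0x0202;    // TMS = 1, TDI = 0
    public static final short TMS_H = TMS | H;     // TMS = 1, TDI = 1
    public static final short OFF = 0x0D0C;        // like L, but with the LED bit cleared

    public void write(short... data) {
        memory.write(0, data, 0, data.length);
        FT_Write(ftHandle, memory, data.length << 1, ref);
    }

    // Byte-shift headers: bit 7 = shift mode, bit 6 = read TDO back, low bits = byte count (1).
    public static final int WR = 0x81;
    public static final int RD = 0xC1;

    // Header byte (low) followed by one data byte (high), shifted out LSB first.
    public static short byteshift(int data, int mode) {
        return (short) ((data << 8) | mode);
    }

    public byte read() {
        FT_Read(ftHandle, memory, 1, ref);
        return memory.getByte(0);
    }

    public static void main(String[] args) throws InterruptedException {
        JTAG_LED jtag = new JTAG_LED("USB-Blaster");
        jtag.write(TMS, TMS, TMS, TMS, TMS);                  // Test-Logic-Reset
        jtag.write(L, TMS, TMS, L, L);                        // Shift-IR
        jtag.write(byteshift(0x0e, WR), L, TMS);              // USER1 instruction (10 bits)
        jtag.write(TMS, TMS, L, L);                           // Shift-DR
        jtag.write(byteshift(0, WR), TMS_H, TMS, TMS, L, L);  // dummy write: data + instance ID 1
        int lfsr = 1;
        // Loop while bit 0 of the input (the right push-button) reads 1.
        for (int b = 1; (b & 1) != 0;) {
            // Send the LFSR value to the LEDs and read the input pins back in the same scan.
            jtag.write(byteshift(lfsr, RD), TMS_H, TMS, TMS, L, L);
            // 8-bit LFSR step with feedback polynomial 0x171.
            if (((lfsr <<= 1) & 256) != 0) lfsr ^= 0x171;
            b = jtag.read() & 255;
            System.out.println(Integer.toBinaryString(b));
            // Bit 1 (the left push-button) reads 0 while pressed, which slows the loop down.
            Thread.sleep(((b & 2) != 0) ? 10 : 100);
        }
        // LEDs off, then Update-DR -> Run-Test/Idle with the LOAD LED bit cleared.
        jtag.write(byteshift(0, WR), TMS_H, TMS, OFF);
        jtag.close();
    }
}
```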
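The TMS sequences in main() can be checked without hardware by walking the standard IEEE 1149.1 TAP controller state machine. The sketch below applies one TMS bit per bit-bang word and reports where the TAP ends up. It assumes TMS stays low while the USB-Blaster is in byte-shift mode (here every byte shift follows an L word), so each shifted byte counts as eight TMS-low clocks. TapWalk and walk are hypothetical names, not part of JNA or D2XX.

```java
public class TapWalk {
    // IEEE 1149.1 TAP controller states.
    enum Tap {
        TEST_LOGIC_RESET, RUN_TEST_IDLE,
        SELECT_DR_SCAN, CAPTURE_DR, SHIFT_DR, EXIT1_DR, PAUSE_DR, EXIT2_DR, UPDATE_DR,
        SELECT_IR_SCAN, CAPTURE_IR, SHIFT_IR, EXIT1_IR, PAUSE_IR, EXIT2_IR, UPDATE_IR;

        // Next state after one rising TCK edge with the given TMS level.
        Tap next(boolean tms) {
            switch (this) {
                case TEST_LOGIC_RESET: return tms ? TEST_LOGIC_RESET : RUN_TEST_IDLE;
                case RUN_TEST_IDLE:    return tms ? SELECT_DR_SCAN : RUN_TEST_IDLE;
                case SELECT_DR_SCAN:   return tms ? SELECT_IR_SCAN : CAPTURE_DR;
                case CAPTURE_DR:       return tms ? EXIT1_DR : SHIFT_DR;
                case SHIFT_DR:         return tms ? EXIT1_DR : SHIFT_DR;
                case EXIT1_DR:         return tms ? UPDATE_DR : PAUSE_DR;
                case PAUSE_DR:         return tms ? EXIT2_DR : PAUSE_DR;
                case EXIT2_DR:         return tms ? UPDATE_DR : SHIFT_DR;
                case UPDATE_DR:        return tms ? SELECT_DR_SCAN : RUN_TEST_IDLE;
                case SELECT_IR_SCAN:   return tms ? TEST_LOGIC_RESET : CAPTURE_IR;
                case CAPTURE_IR:       return tms ? EXIT1_IR : SHIFT_IR;
                case SHIFT_IR:         return tms ? EXIT1_IR : SHIFT_IR;
                case EXIT1_IR:         return tms ? UPDATE_IR : PAUSE_IR;
                case PAUSE_IR:         return tms ? EXIT2_IR : PAUSE_IR;
                case EXIT2_IR:         return tms ? UPDATE_IR : SHIFT_IR;
                default:               return tms ? SELECT_DR_SCAN : RUN_TEST_IDLE; // UPDATE_IR
            }
        }
    }

    // Applies a TMS sequence ('1' = high, '0' = low) and returns the final state.
    static Tap walk(Tap start, String tmsBits) {
        Tap s = start;
        for (char c : tmsBits.toCharArray()) s = s.next(c == '1');
        return s;
    }

    public static void main(String[] args) {
        Tap s = walk(Tap.PAUSE_IR, "11111");   // TMS x5 from an arbitrary state
        System.out.println(s);                 // TEST_LOGIC_RESET
        s = walk(s, "01100");                  // L, TMS, TMS, L, L
        System.out.println(s);                 // SHIFT_IR
        s = walk(s, "0000000001");             // byte shift (8 clocks), L, TMS
        System.out.println(s);                 // EXIT1_IR
        s = walk(s, "1100");                   // TMS, TMS, L, L
        System.out.println(s);                 // SHIFT_DR
        s = walk(s, "00000000" + "11100");     // byte shift, TMS_H, TMS, TMS, L, L
        System.out.println(s);                 // SHIFT_DR
        s = walk(s, "00000000" + "110");       // byte shift, TMS_H, TMS, OFF
        System.out.println(s);                 // RUN_TEST_IDLE
    }
}
```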

If the circuit works, the LEDs start flickering rapidly, though this picture may not show it well. The pattern is generated by an LFSR, so it is not truly random; sometimes it looks as if it is flowing to the left. Pressing the left push-button slows the flickering down. Pressing the right push-button stops the program and turns the LEDs off. When the Java program runs in a console, it prints the state of the input pins in binary, so you can check the states of the push-buttons and DIP switches. All of these operations are controlled by the host PC. As evidence, the LOAD LED (D4) stays lit while the program runs and is off at other times.

This sample program uses the virtual instruction register (VIR) of the sld_virtual_jtag megafunction for data communication, which is admittedly a very unusual use. In practice, writing the VIR means loading USER1 (0000001110) into the JTAG instruction register (IR) and then shifting the data to transmit, followed by the instance ID, into the data register (DR). When only one instance of the megafunction is generated, the instance ID is a single bit with the value 1, so the data must be sent with a trailing 1. The JTAG-related circuitry runs in its own clock domain and therefore needs care about metastability; a DCFIFO (dual-clock FIFO) is a “two-for-one” that satisfies this requirement and provides a FIFO at the same time.
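To make the bit order concrete, here is a sketch that builds the two scan vectors of a VIR write: the 10-bit USER1 code for the IR, and the DR word made of 8 data bits plus the 1-bit instance ID, both listed in the order they are shifted (LSB first). It assumes a single megafunction instance and sld_ir_width = 8, as in the circuit above; the class and helper names are hypothetical.

```java
public class VirScan {
    static final int USER1 = 0x00E;   // 0000001110, the USER1 IR code used above
    static final int IR_LENGTH = 10;

    // Bits of 'value' as a '0'/'1' string in shift order (LSB first).
    static String lsbFirst(int value, int width) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < width; i++) sb.append((value >> i) & 1);
        return sb.toString();
    }

    // DR word for a VIR write: 8 data bits, then the instance ID bit (1 for a single instance).
    static int virDr(int data) {
        return (data & 0xFF) | (1 << 8);
    }

    public static void main(String[] args) {
        System.out.println(lsbFirst(USER1, IR_LENGTH));   // 0111000000
        System.out.println(lsbFirst(virDr(0xA5), 9));    // 101001011
    }
}
```

In the program, the IR vector corresponds to byteshift(0x0e, WR) (8 bits) followed by L and TMS (bits 8 and 9, both 0), and the DR vector to byteshift(data, WR) followed by TMS_H, which shifts the trailing 1 while leaving Shift-DR.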

Saturday, March 16, 2013

Target Board DE0-Nano

Now I’d rather stop theorizing on and on and go build a real circuit. This board has few shortcomings, but they are crucial: its only display is 8 on-board LEDs, and basically the only way to communicate with the host PC is through the JTAG configuration cable. Compare that with the Arduino, which provides serial communication over the download cable alone. Logic synthesis for a multi-core processor takes a fair amount of time, so it makes no sense to redo it from scratch every time the software changes; the free Web Edition does not support incremental compilation yet. If the board can communicate with the host PC, software can be downloaded without logic synthesis, greatly improving development efficiency. Furthermore, if the board can communicate with custom application software in real time, the range of applications for this technology will widen. At one point I was almost discouraged, because searching the web turned up only desperate workarounds such as forcibly redirecting the console I/O of the integrated development environment. I’m going to post this kind of information from time to time.

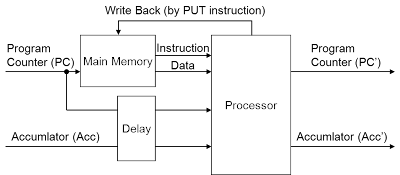

Tuesday, February 12, 2013

Designing Multiprocessor

Now, let’s begin by designing the circuit of an accumulator machine, the fundamental building block of the register-less architecture. First, draw a rough block diagram following the data flow. In an accumulator machine, basically only the program counter (PC) and the accumulator (Acc) flow through the processor. Other data, such as status flags, are omitted here.

Based on its current internal state (PC, Acc), the processor reads an instruction together with its data from main memory in a single access, executes an operation such as an arithmetic or jump instruction, and updates the internal state to (PC’, Acc’). When it executes a PUT instruction, it also updates main memory at the same time. Connect the new internal-state output back to the current internal-state input, and a single processor is done. Easy, isn’t it? To line up with the memory output, the internal-state signals are delayed by the same number of clock cycles as the memory access before being fed into the processor. Since arithmetic and jump instructions amount to changes in the accumulator and the program counter, respectively, the processor basically needs only combinational logic inside. CPI (clocks per instruction, the number of clock cycles needed to execute one instruction) can then be 1 under the conditions listed below.

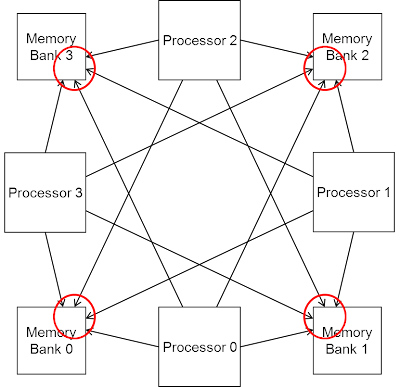

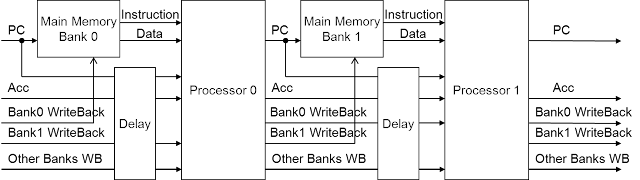

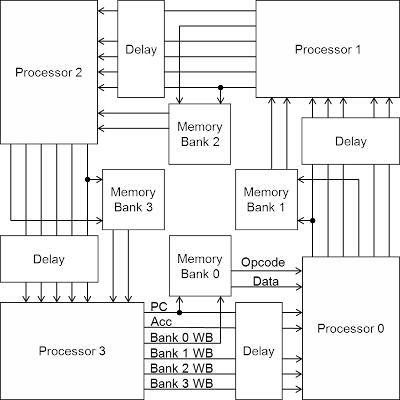
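A minimal software model of the single processor described above may help. It assumes a hypothetical encoding in which each memory word holds an opcode and an immediate operand, so one read fetches both; the opcodes ADD, JMP, PUT and HALT are illustrative, not the author’s actual instruction set. The step function is pure: it maps (PC, Acc) plus the fetched word to (PC’, Acc’), mirroring the combinational core, and PUT is its only side effect on memory.

```java
public class AccStep {
    // Hypothetical encoding: opcode in bits 16 and up, 16-bit immediate operand below.
    static final int ADD = 0, JMP = 1, PUT = 2, HALT = 3;

    static int word(int op, int imm) { return (op << 16) | (imm & 0xFFFF); }

    // The only internal state that flows through the processor: PC and Acc.
    static final class State {
        final int pc, acc;
        State(int pc, int acc) { this.pc = pc; this.acc = acc; }
    }

    // Combinational step: (PC, Acc) plus the word read at PC -> (PC', Acc').
    // PUT also replaces the operand field of mem[imm] with Acc.
    static State step(State s, int[] mem) {
        int w = mem[s.pc];
        int op = w >>> 16, imm = w & 0xFFFF;
        switch (op) {
            case ADD: return new State(s.pc + 1, s.acc + imm);
            case JMP: return new State(imm, s.acc);
            case PUT:
                mem[imm] = (mem[imm] & ~0xFFFF) | (s.acc & 0xFFFF);
                return new State(s.pc + 1, s.acc);
            default:  return s; // HALT: state unchanged
        }
    }

    // One step per "clock" until HALT (or maxSteps); returns the final state.
    static State run(int[] mem, int maxSteps) {
        State s = new State(0, 0);
        for (int i = 0; i < maxSteps && (mem[s.pc] >>> 16) != HALT; i++) s = step(s, mem);
        return s;
    }

    public static void main(String[] args) {
        int[] mem = {
            word(ADD, 5),   // 0: Acc = 5
            word(ADD, 7),   // 1: Acc = 12
            word(PUT, 4),   // 2: operand of word 4 <- 12
            word(JMP, 4),   // 3: jump to 4
            word(HALT, 0),  // 4: HALT (its operand is now 12)
        };
        State s = run(mem, 100);
        System.out.println(s.pc + " " + s.acc + " " + (mem[4] & 0xFFFF)); // 4 12 12
    }
}
```

Because every instruction is finished in one call to step, this model corresponds to the CPI = 1 case; the memory-latency delay of the internal state is not modelled.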

These conditions are not hard to meet, because the embedded memories in recent FPGAs are usually dual-ported and allow one-clock access. Achieving a CPI of 1 is a long-standing goal in processor architecture, and going beyond it requires complicated techniques such as instruction prefetching and instruction-level parallelism. It is therefore better to keep each processor element as simple as this and to leave performance improvement to multiplying the number of processor cores. Connect several of these processors in a ring, each one’s internal-state output feeding the next one’s internal-state input, and a multiprocessor is done. Main memory is divided into banks, one assigned to each processor; with this structure alone, however, a processor’s PUT instruction can write only to its own bank. One could simply accept this inconvenience, but then the accumulator would be the only means of transferring data, which is not only inconvenient but also limits performance because of insufficient inter-processor communication bandwidth.
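A rough software picture of the ring: N processors, each owning one bank, with every thread’s (PC, Acc) handed to the next processor on each clock. It assumes, as in the January 18 post further down, that banks are interleaved by address modulo N, so a thread that starts on processor PC mod N keeps fetching from the local bank as long as it runs straight-line code. The naive restriction described above (a PUT can only reach the local bank) is modelled as a simple check. All names are hypothetical.

```java
public class RingSketch {
    static final int N = 4; // number of processors (and banks)

    // Internal state of one thread: the only thing that moves between processors.
    static final class State {
        final int pc, acc;
        State(int pc, int acc) { this.pc = pc; this.acc = acc; }
    }

    // Bank holding an address, assuming interleaving by address mod N.
    static int bankOf(int address) { return Math.floorMod(address, N); }

    // One clock: processor p executes the thread it holds (here a straight-line
    // instruction that just increments Acc) and passes the result to processor p+1.
    // Returns false if any processor would have had to fetch from a foreign bank.
    static boolean clock(State[] ring) {
        State[] next = new State[N];
        boolean allLocal = true;
        for (int p = 0; p < N; p++) {
            State s = ring[p];
            if (bankOf(s.pc) != p) allLocal = false;
            next[(p + 1) % N] = new State(s.pc + 1, s.acc + 1);
        }
        System.arraycopy(next, 0, ring, 0, N);
        return allLocal;
    }

    // The naive restriction: a PUT issued on processor p can only reach bank p.
    static boolean putReachable(int processor, int address) { return bankOf(address) == processor; }

    public static void main(String[] args) {
        State[] ring = { new State(100, 0), new State(201, 0), new State(302, 0), new State(403, 0) };
        boolean ok = true;
        for (int t = 0; t < 8; t++) ok &= clock(ring);
        System.out.println(ok);                    // true: every fetch stayed local
        System.out.println(putReachable(0, 101));  // false: address 101 is in bank 1
    }
}
```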

A ring-structured multiprocessor like this (in terms of network topology) has the advantage of simple circuits but has difficulty with random access. The system should preferably support the PUT instruction, which performs random-access writes, because writing to other banks is an important form of indirect inter-processor communication. However, naively connecting the write signals of every processor to all banks would require each bank to have as many ports as there are processors and would ruin the simple ring structure.

In fact, we can sidestep the problem of random-access writes by relaxing the memory coherence requirement. For example, writes to a hard disk cannot complete promptly because of seek and rotational latency, yet a computer cannot afford to halt until they finish. Most operating systems solve this by queueing the data in memory and writing it out later all at once. Likewise, for our multiprocessor, the actual results would be no different even if a write to bank 0 were deferred until the next time bank 0 is read. Therefore, the system here defers any write destined for another bank until the program (or thread) itself reaches the destination bank.

This approach guarantees that a program reads back what it has written to a bank. However, what another program writes is not reflected in memory immediately. In terms of memory coherence, this approach maintains coherence within a program (or thread) but not between multiple programs (or threads). Nevertheless, every write-back is reflected in memory by the time the writing program has gone once around all the processors. Therefore, programs written with this write-back delay in mind will cause no problems.
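The deferred write-back can be sketched as a software analogy (hypothetical names, not the actual circuit): each program carries a queue of pending writes as it travels around the ring. A PUT to the local bank lands at once; a PUT to another bank is queued and lands when the program arrives at that bank. Only reads from the local bank are modelled, since random-access reads are left for a later post.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

public class DeferredWrites {
    static final int N = 4;                       // processors = banks
    static final int[][] banks = new int[N][16];  // bank b holds the addresses with addr % N == b

    // A write that has not reached its destination bank yet.
    static final class Pending {
        final int address, value;
        Pending(int address, int value) { this.address = address; this.value = value; }
    }

    // A program (thread) travelling around the ring with its pending writes.
    static final class Task {
        final List<Pending> queue = new ArrayList<>();
        int position; // processor, and hence bank, the task is currently on
        Task(int position) { this.position = position; }
    }

    static int slot(int address) { return address / N; } // index inside the bank

    // Apply every queued write whose destination is the task's current bank.
    static void flush(Task t) {
        for (Iterator<Pending> it = t.queue.iterator(); it.hasNext(); ) {
            Pending w = it.next();
            if (w.address % N == t.position) {
                banks[t.position][slot(w.address)] = w.value;
                it.remove();
            }
        }
    }

    // PUT: written at once if the target is in the local bank, otherwise deferred.
    static void put(Task t, int address, int value) {
        if (address % N == t.position) banks[t.position][slot(address)] = value;
        else t.queue.add(new Pending(address, value));
    }

    // Local read; any deferred write to this bank has already landed on arrival.
    static int readLocal(Task t, int address) {
        if (address % N != t.position) throw new IllegalStateException("not the local bank");
        return banks[t.position][slot(address)];
    }

    // Move to the next processor and land the writes destined for its bank.
    static void advance(Task t) {
        t.position = (t.position + 1) % N;
        flush(t);
    }

    public static void main(String[] args) {
        Task a = new Task(0);
        put(a, 6, 42);                          // address 6 is in bank 2: deferred
        System.out.println(banks[2][slot(6)]);  // 0: not yet visible to other programs
        advance(a);
        advance(a);                             // a arrives at bank 2 and the write lands
        System.out.println(readLocal(a, 6));    // 42
    }
}
```

The writer always sees its own write because the write lands before the writer can read that bank, while other programs may see the old value for up to one lap of the ring.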

The diagram shows an example design with 4 processors. The wiring becomes somewhat more complicated because of the added write-back signals, but the design guarantees enough data-transfer bandwidth between processors and, moreover, needs each bank to be only dual-ported. Random-access reads, however, raise many more issues to consider. To be continued…

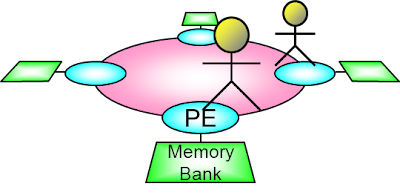

Tuesday, January 22, 2013

Accumulator Machine & Self-modification

A multiprocessor system based on the register-less architecture can be pictured as in the image below: tasks run themselves while circling through the processor elements, chasing one another.

The internal state of each task should be as simple and light as possible so that it can move between processors, which calls for an architecture that minimizes registers. The most typical such architecture is the accumulator machine. For example, an accumulator machine executes instructions of the following form:

ADD memory_address

This instruction adds the value in memory at memory_address to the value of the accumulator Acc, the processor’s only general-purpose register:

Acc <= Acc + (memory value at memory_address)

While quite useful for reducing circuit size, the accumulator machine is poorly suited to high speed because of its frequent memory accesses. To execute “ADD memory_address”, for example, an accumulator machine needs at least two memory accesses: one to read the ADD instruction together with memory_address, and a second to read the value stored at memory_address. Since current processor development aims to minimize memory access, the accumulator machine has been all but abandoned as outdated. Apart from this weakness, however, the accumulator machine has properties well suited to an element of a small-scale multiprocessor. So I am introducing a technique for building a small-scale multiprocessor from improved accumulator machines.

If the processor has registers other than the accumulator, a register read can replace one of the memory accesses by specifying a register number instead of a memory address:

ADD register_number {Acc <= Acc + (register value)}

Even so, the register value can be read only after the register number is known, so it cannot be read at the same time as the instruction. In practice, an instruction is executed through at least four stages:

Most current processors achieve high performance by running these stages simultaneously through pipelining. In reality, however, this technique needs many kinds of effort to perform well, which makes current processors complicated. After all, this kind of problem is unavoidable, even with registers, unless the parameters needed to execute an instruction (for addition, both the left-hand side and the right-hand side) are fixed at the moment the instruction is fetched. The only solution is to restrict instruction parameters to fixed (immediate/literal) values. For the ADD instruction, it looks like this:

ADD immediate {Acc <= Acc + (immediate value)}

If the processor can read the instruction and its parameter at the same time, it needs only one memory access. It can then perform the addition immediately and basically needs no complicated pipelining. Of course, a processor that runs only programs with fixed parameters would be useless, but this is solvable with self-modifying code. More specifically, a PUT instruction replaces the parameter of the instruction at the address given by the PUT’s own parameter with the current value of the accumulator:

PUT instruction_address {[instruction_address] <= Acc}

Because the parameter of a PUT instruction can itself be rewritten by another PUT instruction, arrays and other forms of indirect addressing are also possible. In effect, the PUT instruction turns the parameter of every instruction into a general-purpose register. Self-modifying code is rarely used in current processors except in special cases. Besides issues such as security and memory protection, the biggest drawback seems to be that self-modifying code is very inefficient with cache memory and instruction pipelines. The register-less architecture puts this out-of-the-mainstream technique of self-modification in the spotlight and uses it aggressively.
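Here is a sketch of the indirect-addressing trick, using a hypothetical immediate-only instruction set (LDI, ADD, PUT, HALT with a 16-bit operand field; not the author’s actual encoding). The first PUT computes an address and patches it into the operand of a second PUT, which then stores into element 2 of an array that starts at address 20.

```java
public class SelfModify {
    // Hypothetical immediate-only ISA: every operand is a literal inside the instruction word.
    static final int LDI = 0, ADD = 1, PUT = 2, HALT = 3;

    static int word(int op, int imm) { return (op << 16) | (imm & 0xFFFF); }
    static int operand(int w) { return w & 0xFFFF; }

    // Runs from address 0 until HALT; PC and Acc are the only internal state.
    static int run(int[] mem) {
        int pc = 0, acc = 0;
        while (true) {
            int w = mem[pc], op = w >>> 16, imm = operand(w);
            switch (op) {
                case LDI: acc = imm; pc++; break;
                case ADD: acc += imm; pc++; break;
                // PUT: replace the operand field of mem[imm] with Acc.
                case PUT: mem[imm] = (mem[imm] & ~0xFFFF) | (acc & 0xFFFF); pc++; break;
                default:  return acc; // HALT
            }
        }
    }

    public static void main(String[] args) {
        int[] mem = new int[32];
        mem[0] = word(LDI, 20);   // Acc = base address of an array at 20
        mem[1] = word(ADD, 2);    // Acc = 20 + index 2
        mem[2] = word(PUT, 4);    // patch the operand of the PUT at address 4
        mem[3] = word(LDI, 99);   // Acc = value to store
        mem[4] = word(PUT, 0);    // operand becomes 22 at run time: array[2] <- 99
        mem[5] = word(HALT, 0);
        run(mem);
        System.out.println(operand(mem[22]));  // 99
        System.out.println(operand(mem[4]));   // 22
    }
}
```

Every memory access here reads one instruction together with its literal operand, which is the single-access property the immediate-only design relies on.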
In short, the register-less architecture revives two non-mainstream techniques, the accumulator machine and self-modifying code, as the building blocks of a small-scale multiprocessor. To be continued…

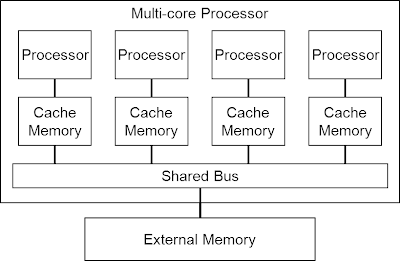

Friday, January 18, 2013

Multiprocessor & Memory Access

In recent years, multi-core processors have become common in notebook PCs and even mobile devices, and smartphones with quad-core processors are now on the market. Then why not add even more cores? Unfortunately, it isn’t that easy. Besides Amdahl’s law, which follows from the fact that real-world programs cannot always be parallelized, even programs that can be parallelized are inevitably held back by insufficient memory-access throughput (the von Neumann bottleneck). To compute, a CPU must read programs and data from memory and then write the results back to memory. No matter how fast the CPU itself is, it cannot do much unless it can access memory at the same speed. So how do current multiprocessors deal with this problem?
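For reference, Amdahl’s law bounds the speedup S on n cores when only a fraction p of the work can be parallelized: S(n) = 1 / ((1 - p) + p / n). A few lines of Java make the diminishing returns visible; p = 0.9 is just an example value, and the memory bottleneck discussed here comes on top of this limit.

```java
public class Amdahl {
    // Speedup on n cores when a fraction p of the work is parallelizable.
    static double speedup(double p, int n) {
        return 1.0 / ((1.0 - p) + p / n);
    }

    public static void main(String[] args) {
        for (int n : new int[] {1, 2, 4, 16, 1024}) {
            System.out.printf("n=%d speedup=%.2f%n", n, speedup(0.9, n));
        }
    }
}
```

However many cores are added, the speedup with p = 0.9 stays below 1 / (1 - p) = 10.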

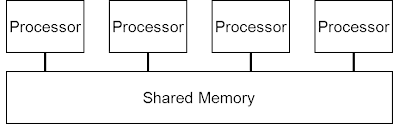

Most multiprocessors are basically configured with distributed cache memory, as shown in the figure above. In this configuration, each processor copies the parts of programs and data it needs into its cache and works from there, drastically reducing accesses to shared memory. Because the caches and the shared bus usually run much faster than external shared memory, a multi-core processor’s performance increases as internal cores are added. Since memory access directly determines processor performance, cache memory is typically the component that consumes the largest area and the most power in a processor. Cache memory was originally meant to bridge the speed gap between external memory and the processor core, but thanks to cache coherency it has also become indispensable for simultaneous memory access in multi-core processors. In a processor with on-chip memory, however, a cache is of course wasteful and useless. So how can a small-scale multiprocessor with embedded memory be built? The first option is brute force.

In theory, multiport memory allows shared memory to be connected directly to every processor. However, it is area-inefficient (the required area is said to grow with the square of the number of ports), and it is not available in FPGAs, which usually offer at most two ports. Another option is to give up shared memory, assign local memory to each processor, and add a communication bus. This arrangement makes the hardware easy to design and is therefore relatively common; for example, the Cell processor in the PS3 has a similar structure.

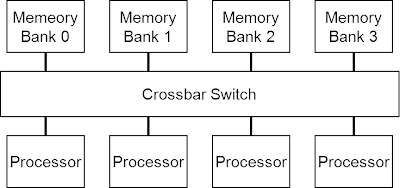

The hardware may be easy to design, but the software becomes all the harder. Especially in a small-scale processor, dividing the already scarce main memory among the processors is out of the question. The system could also be designed so that a processor can somehow access the local memory of other processors, but the added complexity seems to make this inefficient. Another structure divides shared memory not per processor but into memory banks that correspond to address ranges.

A crossbar switch allows simultaneous access as long as each processor targets a different memory bank. When several processors try to access the same bank, however, all but one of them are blocked. The problem is that the crossbar grows rapidly more complex as the number of processors increases. There has been a great deal of research on simplifying it since early times (like this study, for example). Interesting results appear if memory is divided into banks by the remainder of each address divided by the number of processors. In the figure above, for example, memory banks 0 through 3 hold the addresses whose remainders, when divided by 4, are 0 through 3. If at some moment four processors have the execution addresses 100, 201, 302 and 403, the remainders of these addresses divided by 4 are 0, 1, 2 and 3, so the processors can fetch opcodes from their respective banks simultaneously. At the next moment, the execution addresses become 101, 202, 303 and 404, with remainders 1, 2, 3 and 0, again allowing simultaneous access to separate banks. Likewise, the four processors can keep accessing memory simultaneously as long as none of them executes a jump instruction. If the group of processors could be rotated in step with this pattern, no crossbar would be needed at all. Of course, processors cannot be physically rotated inside an IC, so in practice the entire internal state of every processor is transferred along a chain of bus connections.
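The arithmetic in this example can be checked directly. The sketch below maps each execution address to its bank (address mod 4), checks that no two processors need the same bank in a cycle, and advances every PC by one, as happens when no processor jumps. The class and method names are hypothetical; the numbers are the ones from the text.

```java
import java.util.Arrays;

public class BankInterleave {
    static final int BANKS = 4;

    // Bank holding each address when memory is interleaved by address mod BANKS.
    static int[] banksOf(int[] pcs) {
        int[] banks = new int[pcs.length];
        for (int i = 0; i < pcs.length; i++) banks[i] = pcs[i] % BANKS;
        return banks;
    }

    // True if no two processors need the same bank in this cycle.
    static boolean conflictFree(int[] banks) {
        boolean[] used = new boolean[BANKS];
        for (int b : banks) {
            if (used[b]) return false;
            used[b] = true;
        }
        return true;
    }

    public static void main(String[] args) {
        int[] pcs = {100, 201, 302, 403};
        for (int step = 0; step < 3; step++) {
            int[] banks = banksOf(pcs);
            System.out.println(Arrays.toString(pcs) + " -> " + Arrays.toString(banks)
                    + (conflictFree(banks) ? " ok" : " conflict"));
            for (int i = 0; i < pcs.length; i++) pcs[i]++; // no jumps: PC += 1
        }
    }
}
```

The first two lines of output show the banks [0, 1, 2, 3] and then [1, 2, 3, 0], matching the text; each bank’s stream of requests simply moves one processor over per cycle, which is why passing the processor states along a ring can replace the crossbar.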

A processor’s internal state naturally includes the values of all its registers, so as many values as the processor has registers must be passed on to the next processor. That means the fewer registers a processor has, the more efficient the implementation can be. This is where a small-scale multiprocessor based on the register-less architecture becomes feasible. To be continued…