Friday, January 18, 2013

Multiprocessor & Memory Access

In recent years, multi-core processors have become common in notebook PCs and even in mobile devices, and quad-core smartphones are now on the market. So why not simply add more cores? It is not that easy. Amdahl's law already limits the gain, because not every program can be fully parallelized. But even for programs that can, insufficient memory access throughput inevitably holds performance down (the von Neumann bottleneck). To compute, a CPU has to read programs and data from memory and write results back to memory. No matter how fast the CPU itself runs, it cannot do much unless it can reach memory at a matching speed. So how do current multiprocessors address this problem?
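As a rough illustration of the Amdahl's law limit mentioned above, here is a minimal sketch in Python. The function name `amdahl_speedup` and the 90% parallel fraction are assumptions chosen for the example, not figures from this post, and the formula ignores memory contention entirely.

```python
def amdahl_speedup(parallel_fraction, num_cores):
    """Ideal speedup when only `parallel_fraction` of the work scales with cores."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if num_cores < 1:
        raise ValueError("num_cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time; only the parallel part is divided.
    return 1.0 / (serial_fraction + parallel_fraction / num_cores)


# Assumed workload: 90% of the program can run in parallel.
for cores in (1, 2, 4, 8, 16):
    print(cores, round(amdahl_speedup(0.9, cores), 2))
```

With a 90% parallel fraction, the speedup stays below 10 no matter how many cores are added, and that is before any memory bottleneck is taken into account.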

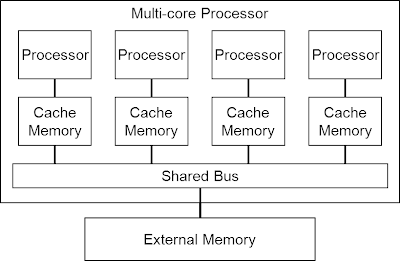

Most multiprocessors are configured with distributed cache memory, as shown in the figure above. In this configuration, each processor copies the parts of programs and data it needs into its own cache, drastically reducing the need to access shared memory. Because cache memory and the shared bus usually run much faster than external shared memory, a multi-core processor gains performance as the number of internal cores increases. Since memory access directly determines processor performance, cache memory is the component that consumes the largest area and the most electric power in a processor. Cache memory originally served to bridge the speed gap between external memory and the inside of the processor, but thanks to cache coherency it has also become indispensable for simultaneous memory access in multi-core processors. In a processor with chip-embedded memory, however, cache memory is simply wasted. How, then, can a small-scale multiprocessor with embedded memory be built? The first option is brute force.

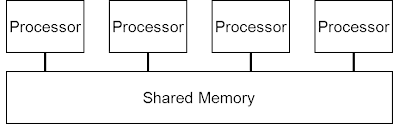

Multiport memory theoretically allows shared memory to be connected directly to the processors. However, it lowers area efficiency (the required area is said to be proportional to the square of the number of ports), and it is not available in an FPGA, whose memory usually has at most two ports. Another choice is to give up shared memory and instead assign local memory to each processor, with a communication bus between them. This arrangement makes the hardware easier to design and so is relatively common. For example, the Cell processor in the PS3 has a similar structure.

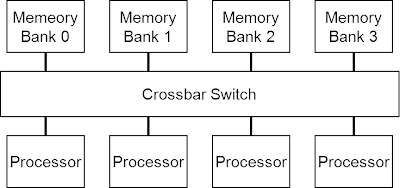

Although the hardware is easy to design, the software becomes all the harder. In small-scale processors especially, dividing already scarce main memory among the processors is out of the question. The system could also be designed so that a processor can somehow access the local memory of other processors, but the added complexity makes this seem inefficient. Another possible structure divides shared memory not per processor but into memory banks, each corresponding to an address area.

A crossbar switch enables simultaneous access as long as each processor targets a different memory bank. When several processors try to access the same bank, all but one of them are blocked. The problem is that the crossbar mechanism grows rapidly more complex as the number of processors increases. How to simplify it has been the subject of a lot of research since early on (this study, for example).

Something interesting happens if you divide memory into banks by the remainder of each address divided by the number of processors. In the figure above, for example, the memory banks numbered 0 through 3 are the memory areas whose addresses leave remainders 0 through 3 when divided by 4. Suppose that at one point in time four processors have execution addresses 100, 201, 302 and 403. The remainders of these addresses divided by 4 are 0, 1, 2 and 3, so each processor can fetch its operation code from its own memory bank simultaneously. At the next point, the execution addresses become 101, 202, 303 and 404, and the remainders are 1, 2, 3 and 0, again allowing simultaneous access to four different banks. The four processors stay conflict-free in this way unless one of them executes a jump instruction. If the group of processors could be shifted by one position at each step, there would be no need for a crossbar mechanism at all. It is of course impossible to physically rotate processors inside an IC, so the actual method is to transfer the entire internal state of every processor to the next one through a chain of bus connections.
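The bank assignment and the state hand-off described above can be sketched in a few lines of Python. This is only an illustrative model under stated assumptions: the names `bank_of`, `conflicts` and `rotate_states` are hypothetical, each processor state is reduced to a program counter, and every instruction is assumed to occupy exactly one address.

```python
NUM_BANKS = 4  # matches the four-bank example in the text


def bank_of(address, num_banks=NUM_BANKS):
    """Bank index is the remainder of the address divided by the bank count."""
    return address % num_banks


def conflicts(addresses, num_banks=NUM_BANKS):
    """Map each contended bank to the processors requesting it this cycle."""
    requests = {}
    for cpu, address in enumerate(addresses):
        requests.setdefault(bank_of(address, num_banks), []).append(cpu)
    return {bank: cpus for bank, cpus in requests.items() if len(cpus) > 1}


def rotate_states(states):
    """Hypothetical hand-off: slot i is wired to bank i only, with no crossbar.

    Each state is a dict holding a program counter ("pc"). After the fetch,
    every pc advances by one and the state moves to the next slot in the ring.
    """
    n = len(states)
    for slot, state in enumerate(states):
        # Fixed wiring only works while each state sits at its matching bank.
        assert bank_of(state["pc"], n) == slot
        state["pc"] += 1
    return [states[-1]] + states[:-1]


# Sequential execution from the example addresses: no bank is requested twice.
pcs = [100, 201, 302, 403]
for cycle in range(3):
    print(cycle, [bank_of(pc) for pc in pcs], conflicts(pcs))
    pcs = [pc + 1 for pc in pcs]

# If one processor jumps (here to address 200), two processors hit bank 0.
print(conflicts([100, 200, 302, 403]))
```

In this model the states, not the processors, move around the ring, so each slot only ever needs a connection to a single bank. How jumps are handled is outside the scope of the sketch.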

Since a processor's internal state naturally includes the values of all its registers, as many values as the processor has registers must be sent to the next processor. That means the fewer registers a processor has, the more efficient the implementation can be. This points to the feasibility of a small-scale multiprocessor based on a register-less architecture. To be continued…